Part 2 – Definitions


Decimal Arithmetic FAQ



Algorism is the name for the Indo-Arabic decimal system of writing and working with numbers. In this system, symbols (the ten digits 0 through 9) describe values using place value: each digit position has ten times the weight of the position to its right.
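As a small illustration of the place-value rule, the Python sketch below evaluates a string of decimal digits by multiplying the running total by ten before adding each new digit, so every position ends up with ten times the weight of the position to its right. The function name `place_value` is purely illustrative.

```python
def place_value(digits: str) -> int:
    """Return the value of a string of decimal digits (most significant first)."""
    value = 0
    for ch in digits:
        if ch not in "0123456789":
            raise ValueError(f"not a decimal digit: {ch!r}")
        # Multiplying by ten shifts every digit seen so far one place left,
        # giving it ten times its previous weight; then add the new digit.
        value = value * 10 + (ord(ch) - ord("0"))
    return value


print(place_value("1905"))  # 1905
```

For example, `place_value("1905")` computes 1×10³ + 9×10² + 0×10¹ + 5×10⁰ = 1905.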

This system was originally invented in India in the 6th century AD (or earlier), and was soon adopted in Persia and in the Arab world. Persian and Arab mathematicians made many contributions (including the concept of decimal fractions as an extension of the notation), and the written European form of the digits is derived from the ghubar (sand-table or dust-table) numerals used in north-west Africa and Spain.

The word algorism comes from the Arabic al-Kowarizmi (“the one from Kowarizm”), the cognomen (nickname) of an early-9th-century mathematician, possibly from what is now Khiva in western Uzbekistan. The word algorithm also derives from his name.

See also Wikipedia: Algorism.

In English, the word precision generally means the state of being precise, or the repeatability of a measurement.

In computing and arithmetic, it has some more specific (and different) meanings (the first is the most common):

A calculation which rounds to three digits is said to have a working precision or rounding precision of 3.
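Python's standard `decimal` module lets you set the working precision directly, so a precision of 3 can be tried out as below. This is a sketch using that module's context (`localcontext`, `prec`); other decimal libraries expose the same idea under different names.

```python
from decimal import Decimal, localcontext

# Working (rounding) precision of 3: every arithmetic result is rounded
# to at most 3 significant digits (the module's default rounding is ROUND_HALF_EVEN).
with localcontext() as ctx:
    ctx.prec = 3
    one_third = Decimal(1) / Decimal(3)    # 0.333
    two_thirds = Decimal(2) / Decimal(3)   # 0.667
    rounded = +Decimal("12345")            # unary plus applies the context: 1.23E+4
    exact = Decimal("12345")               # construction itself is exact, not rounded

print(one_third, two_thirds, rounded, exact)
```

Note that only operations are rounded to the working precision; creating a `Decimal` from a string keeps every digit.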

See also Wikipedia: Precision.

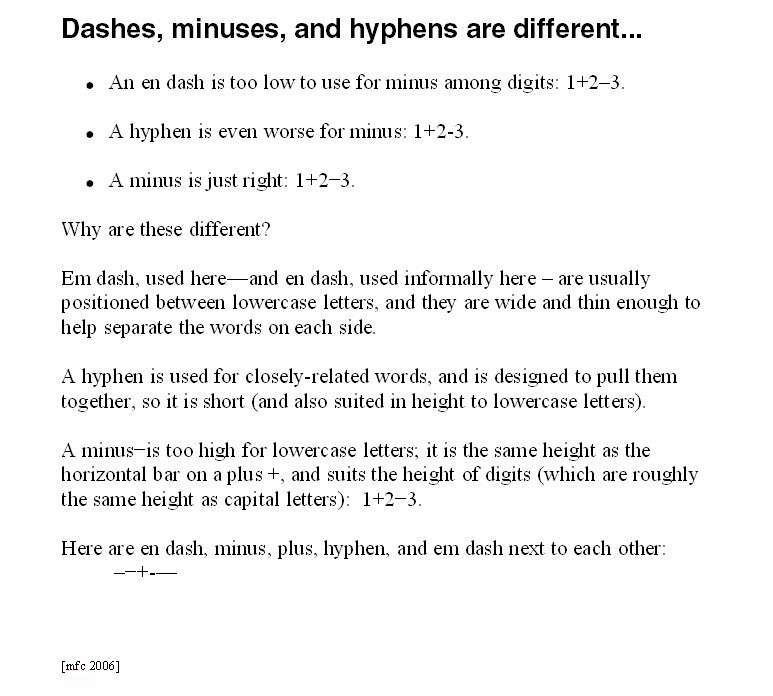

A dash or a hyphen is vertically positioned lower than a minus because the latter is designed to be used alongside digits (which are typically the same height as capital letters) whereas dashes usually appear between lowercase letters.

“A picture is worth a thousand words” (and in this case

is the only way to guarantee the differences are shown correctly):
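Because these characters are easy to confuse on screen, it can help to check which one is actually present in a piece of text. The sketch below prints the Unicode names of four look-alike characters and shows that Python's `decimal` module accepts only the ASCII hyphen-minus (U+002D) as a sign, not the typographic minus sign (U+2212). The selection of characters is illustrative.

```python
import unicodedata
from decimal import Decimal, InvalidOperation

# Four characters that are easily confused on screen but are distinct code points.
lookalikes = ["\u002d", "\u2010", "\u2013", "\u2212"]
names = {f"U+{ord(ch):04X}": unicodedata.name(ch) for ch in lookalikes}

for code, name in names.items():
    print(code, name)

# The decimal module parses only the ASCII hyphen-minus as a sign;
# the typographic minus sign (U+2212) is rejected as invalid syntax.
print(Decimal("-5"))  # -5
try:
    Decimal("\u22125")
except InvalidOperation:
    print("U+2212 is not accepted as a sign")
```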

These terms are derived or extrapolated from the IEEE 754 and 854 standards, and describe the various kinds of numbers that can be represented in a given computer encoding. The answers in Part 5 of the FAQ explain the meaning of some of these terms in more detail.

Here’s a table illustrating the terms above, showing positive numbers only. The symbols on the left are sometimes used to refer to specific values. The example values on the right are for the 32-bit decimal encoding with 7 digits of precision (decimal32).

| Symbol | Name | Range | Example |
|---|---|---|---|
| | Overflow threshold | Supernormal numbers | 10E+96 |
| Nmax | Largest normal number (maximum representable number) | Normal numbers | 9.999999E+96 |
| Unity | One | Normal numbers | 1 (1E+0) |
| Nmin | Smallest normal number (underflow threshold) | Normal numbers | 1E-95 |
| | Largest subnormal number | Subnormal numbers | 0.999999E-95 |
| Ntiny | Smallest subnormal number (minimum representable number) | Subnormal numbers | 1E-101 |

Note that the example value of Ntiny could also be written 0.000001E-95. The exponent of Nmax when written in scientific notation (+96 in the example) characterizes an encoding and is called Emax. The exponent of the smallest normal number, -Emax+1 (-95 in the example), is called Emin. The smallest exponent that can appear when a number is written in scientific notation (-101 in the example) is called Etiny; Etiny is Emin-(p-1), where p is the precision of the encoding (7 in these examples).
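The relationships between Emax, Emin, Etiny and p can be checked with Python's `decimal` module, whose `Context` accepts `prec`, `Emax` and `Emin` directly. The sketch below rebuilds the decimal32 example values from the table, classifies them, and shows a subnormal result keeping one digit fewer than the full precision. Using Python's `decimal` module is an assumption for illustration: the context is configured to mimic decimal32's limits, not to reproduce its bit encoding.

```python
from decimal import Context, Decimal

# decimal32 parameters from the table: precision p and maximum exponent Emax.
p, emax = 7, 96
emin = 1 - emax            # Emin = -Emax+1 = -95
etiny = emin - (p - 1)     # Etiny = Emin-(p-1) = -101

ctx = Context(prec=p, Emax=emax, Emin=emin)
assert ctx.Etiny() == etiny  # the decimal module derives Etiny the same way

# Decimal((sign, digits, exponent)) builds a value exactly, with no rounding.
nmax = Decimal((0, (9,) * p, emax - (p - 1)))       # 9.999999E+96
nmin = Decimal((0, (1,), emin))                     # 1E-95
largest_sub = Decimal((0, (9,) * (p - 1), etiny))   # 0.999999E-95, printed as 9.99999E-96
ntiny = Decimal((0, (1,), etiny))                   # 1E-101

for name, x in [("Nmax", nmax), ("Nmin", nmin),
                ("largest subnormal", largest_sub), ("Ntiny", ntiny)]:
    kind = "normal" if ctx.is_normal(x) else "subnormal" if ctx.is_subnormal(x) else "other"
    print(f"{name:18} {x!s:14} {kind}")

# A result smaller than Nmin keeps only the digits that fit at or above Etiny:
# 1E-95 / 3 gets 6 significant digits here, not 7.
tiny_quotient = ctx.divide(nmin, Decimal(3))        # 3.33333E-96
print(tiny_quotient)
```

The loss of a digit in the last line is what distinguishes subnormal numbers from normal ones: their coefficient cannot use all p digits because the exponent cannot go below Etiny.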

For more on the ordering of decimal numbers, see “Which is larger? 7.5 or 7.500?”

Please send any comments or corrections to Mike Cowlishaw, mfc@speleotrove.com


Copyright © IBM Corporation 2000, 2007. All rights reserved.
